Narrative AI Game Analysis

Automatically building a narrative game review instead of depending on engine evaluations

The Problem with Current Game Reviews

Most chess analysis tools today feel like having a backseat driver who is constantly yelling at you about what is going wrong. You get a stream of move-by-move commentary—“that was +0.3, now it’s +0.7, oops back to +0.2”—but no sense of where you’re actually going or why you took the scenic route to get there. Sure, the engine dutifully flags every inaccuracy and marks your blunders with stern disapproval, but what about the story of the game? What were you trying to accomplish in the middlegame? Did that pawn break actually serve a purpose, or were you just pushing pieces around?

The real frustration comes when you’re trying to improve as an adult player. You don’t need to know that move 23 was 0.4 pawns worse than the computer’s top choice—you need to understand why your position fell apart or how you missed your opponent’s tactical shot three moves later. Current analysis tools treat every slight evaluation swing as equally important, giving you a microscopic view when what you really need is the wide-angle lens. They’ll tell you about both players’ mistakes with the same clinical detachment, but as someone trying to get better, you don’t need a play-by-play of how your opponent failed to punish your errors. You need to understand your own decision-making process: were you following a coherent plan, did you spot the critical moments, and when things went sideways, how did you handle it?

Using Words, Not Evals

Here’s the thing: I’m a club player, not Magnus Carlsen. When I look at a position that’s gone from +0.4 to +0.7, I don’t immediately think “ah yes, I’ve improved my winning chances by roughly 12%.” I think “this feels a bit better than before, but honestly, who knows?” At the club level, evaluations bounce around like a pinball: positions that are theoretically “equal” can flip dramatically with one tactical oversight or a poorly timed pawn push. The difference between +0.3 and +0.7 might matter a lot to a grandmaster squeezing every drop of advantage from a position, but for most of us, it’s noise until someone hangs a piece.

So instead of drowning in decimal points, I decided to translate those engine evaluations into something that actually makes sense to human brains: words. The same Stockfish engine still crunches the numbers behind the scenes, but now positions get labeled as “roughly equal,” “slightly better for white,” or “significantly worse”—categories that map to how you’d actually think during a game. I also track trends over time: is the position “relatively unchanged” over the last few moves, or has it “significantly improved” for one side? It turns out this approach has a nice bonus too—large language models are trained on, well, language, so feeding them descriptive phrases instead of raw numbers gives them much more to work with when generating insights about your game.
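A minimal sketch of that translation might look like the following. The centipawn thresholds, the window size, and the function names are assumptions chosen for illustration, not the values the project actually uses; only the label wording follows the phrases quoted above.

```python
# Evaluations are in centipawns from White's point of view.
# Each band is (upper limit, label). The limits are illustrative guesses.
ADVANTAGE_BANDS = [
    (50, "roughly equal"),
    (150, "slightly better"),
    (400, "significantly better"),
]


def describe_eval(cp: int) -> str:
    """Turn a centipawn score into a short phrase such as 'slightly better for white'."""
    side = "white" if cp > 0 else "black"
    magnitude = abs(cp)
    for limit, label in ADVANTAGE_BANDS:
        if magnitude < limit:
            # An equal position does not favour either side, so no side is named.
            return label if label == "roughly equal" else f"{label} for {side}"
    return f"winning for {side}"


def describe_trend(evals: list[int], window: int = 4) -> str:
    """Describe how the evaluation moved over the last `window` plies."""
    if len(evals) < 2:
        return "relatively unchanged"
    recent = evals[-(window + 1):]
    delta = recent[-1] - recent[0]
    if abs(delta) < 50:
        return "relatively unchanged"
    side = "white" if delta > 0 else "black"
    strength = "significantly" if abs(delta) >= 150 else "slightly"
    return f"{strength} improved for {side}"
```

Keeping the bands in one small table makes it easy to tune them for a given playing level: a club player might widen the "roughly equal" band, since small edges rarely decide games at that level.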

Where AI Can Help

Let’s be clear about one thing: general-purpose AI chatbots are currently terrible at actually playing chess. There’s a funny video floating around where Magnus Carlsen plays blindfold against ChatGPT, and it goes about as well as you’d expect: ChatGPT starts confidently enough but eventually begins making illegal moves that would get you kicked out of a kindergarten chess club.

But here’s the interesting part: while AI can’t play chess to save its digital life, it’s surprisingly decent at understanding chess. Give Claude or ChatGPT a sequence of moves with some context, and it can actually write coherently about plans, themes, and strategic ideas. It might not be able to find the brilliant tactical shot that wins material, but it can look at a series of moves and say something sensible like “white is trying to create kingside attacking chances while black is seeking counterplay on the queenside.” For top-level players, this kind of general commentary might be too superficial to be useful. But for club players like me? It knows enough chess concepts to provide genuine insights about what was actually happening in the game, especially when you feed it the descriptive position evaluations from the previous section instead of raw engine output.

Plus, AI excels at one thing that traditional game review completely misses: taking a 40-move game and breaking it into logical chapters, identifying when one phase ended and another began, and explaining how the story of the game unfolded.

How My Project Works

The magic happens in a few straightforward steps that transform a raw chess game into something actually readable and useful. It starts simple enough: feed the system a PGN file, and Stockfish gets to work evaluating every single position. But instead of spitting out a bunch of “+0.73” nonsense, the system translates those evaluations into the descriptive language we talked about earlier—“white holds a slight advantage,” “the position remains roughly balanced,” “black’s situation has deteriorated significantly.”
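The first step could be sketched roughly as below, assuming the python-chess library and a Stockfish binary reachable as `stockfish` on the PATH. The function names, the search depth, and the mate-score value are illustrative assumptions rather than the project's actual settings.

```python
def evaluate_game(pgn_path: str, engine_path: str = "stockfish", depth: int = 15):
    """Return (move_number, side, san, centipawns from White's view) for every ply."""
    # python-chess is imported here so the formatting helper below
    # can be used without the library or an engine installed.
    import chess
    import chess.engine
    import chess.pgn

    with open(pgn_path, encoding="utf-8") as handle:
        game = chess.pgn.read_game(handle)
    if game is None:
        raise ValueError(f"No game found in {pgn_path}")

    board = game.board()
    rows = []
    with chess.engine.SimpleEngine.popen_uci(engine_path) as engine:
        for move in game.mainline_moves():
            move_number = board.fullmove_number
            side = "white" if board.turn == chess.WHITE else "black"
            san = board.san(move)  # SAN must be computed before the move is pushed
            board.push(move)
            info = engine.analyse(board, chess.engine.Limit(depth=depth))
            # Forced mates are mapped to a large centipawn value so every row is an int.
            cp = info["score"].white().score(mate_score=10000)
            rows.append((move_number, side, san, cp))
    return rows


def format_ply(move_number: int, side: str, san: str, label: str) -> str:
    """Render one ply as a line of text, e.g. '12. Nf3: roughly equal'."""
    separator = "." if side == "white" else "..."
    return f"{move_number}{separator} {san}: {label}"
```

Each row can then be passed through whatever function turns centipawns into words, and the resulting lines become the move list that the language model reads.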

Here’s where things get interesting. The system hands all of this contextual information to a large language model (LLM) and asks it to do something traditional analysis tools never attempt: break the game into logical chapters. Maybe the opening lasted 12 moves, then there was a 15-move middlegame battle for the center, followed by a tactical skirmish that decided the game. The AI looks at the flow of moves and evaluation trends and says “okay, this game naturally breaks into four distinct phases, and here’s why each one matters.”
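One way to make the chaptering step dependable is to ask the model for a structured reply and then check it before using it. The sketch below is an assumption about how that could be done: the prompt wording, the JSON field names, and both function names are hypothetical, and the call to the model itself is left out.

```python
import json


def build_chapter_prompt(annotated_moves: list[str]) -> str:
    """Assemble the chaptering request from one text line per move."""
    return (
        "You are reviewing a chess game for a club player.\n"
        "Each line below is one move followed by a plain-language evaluation.\n"
        "Split the game into consecutive chapters that together cover every move.\n"
        'Reply with JSON only: [{"title": str, "first_move": int, "last_move": int}]\n\n'
        + "\n".join(annotated_moves)
    )


def parse_chapters(reply: str, total_moves: int) -> list[dict]:
    """Parse the model's reply and verify the chapters tile moves 1..total_moves."""
    chapters = json.loads(reply)
    expected_start = 1
    for chapter in chapters:
        if chapter["first_move"] != expected_start:
            raise ValueError(f"chapter {chapter['title']!r} leaves a gap or overlaps")
        if chapter["last_move"] < chapter["first_move"]:
            raise ValueError(f"chapter {chapter['title']!r} has an empty move range")
        expected_start = chapter["last_move"] + 1
    if expected_start != total_moves + 1:
        raise ValueError("chapters do not cover the whole game")
    return chapters
```

The validation matters because a model can return chapters that skip or repeat moves. Rejecting such a reply and asking again is cheaper than producing a review with a hole in it.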

For each chapter, I get three levels of analysis that work like a choose-your-own-adventure book:

The Big Picture: What was actually happening during this phase? Were you launching a kingside attack? Trying to trade into an endgame? Slowly improving your pieces while your opponent flailed around? This section captures the narrative thread that holds the moves together.

Key Learning Points: The practical takeaways that might actually help your next game. Things like “you rushed your attack before completing development” or “you correctly identified that trading queens would simplify to a winning endgame.” These are the insights you could theoretically apply in future games.

Deeper Analysis: For when you want to nerd out a bit more. This section gets more technical about specific move sequences, alternative plans you might have considered, or tactical patterns that emerged. Most of the time, the first two sections give you everything you need, but sometimes you want to understand the chess mechanics behind the story.
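The three levels map naturally onto a small record per chapter. The following is a sketch under that assumption; the class name, field names, and rendering layout are hypothetical and only mirror the section titles described above.

```python
from dataclasses import dataclass, field


@dataclass
class ChapterReview:
    """One chapter of the game with its three levels of analysis."""
    title: str
    first_move: int
    last_move: int
    big_picture: str
    key_learning_points: list[str] = field(default_factory=list)
    deeper_analysis: str = ""


def render_chapter(chapter: ChapterReview, include_deeper: bool = False) -> str:
    """Render a chapter as plain text; the deeper analysis is opt-in."""
    lines = [
        f"{chapter.title} (moves {chapter.first_move}-{chapter.last_move})",
        "",
        "The Big Picture",
        chapter.big_picture,
        "",
        "Key Learning Points",
    ]
    lines += [f"- {point}" for point in chapter.key_learning_points]
    if include_deeper and chapter.deeper_analysis:
        lines += ["", "Deeper Analysis", chapter.deeper_analysis]
    return "\n".join(lines)
```

Making the deeper analysis opt-in reflects the point that the first two sections are usually enough, while the technical detail stays available for the chapters you want to dig into.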

The whole process runs on Claude Sonnet, and honestly, I was bracing myself for a hefty API bill when I started this project. Turns out analyzing a complete game costs about ten cents’ worth of tokens: less than a gumball, and infinitely more useful than staring at evaluation graphs that look like a seismograph during an earthquake.
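To sanity-check a figure like that for your own games, the arithmetic is simple. In the sketch below, the token counts and the per-million-token prices are placeholder assumptions, not measurements from this project or quoted rates; substitute the usage numbers your API responses report and the provider's current price list.

```python
def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_price_per_mtok: float,
    output_price_per_mtok: float,
) -> float:
    """Cost of one analysis given token counts and prices per million tokens."""
    total = input_tokens * input_price_per_mtok + output_tokens * output_price_per_mtok
    return total / 1_000_000


# Hypothetical example values, chosen only to illustrate the calculation.
cost = estimate_cost_usd(
    input_tokens=15_000,
    output_tokens=3_500,
    input_price_per_mtok=3.00,
    output_price_per_mtok=15.00,
)
print(f"${cost:.2f} per game")
```

Output tokens are typically priced higher than input tokens, so a long written review can cost as much as the full annotated move list that was sent in.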

Example Output

Let me show you what this actually looks like in practice with a recent over-the-board tournament game I played. The contrast between traditional analysis and AI-powered game review becomes pretty stark when you see them side by side.

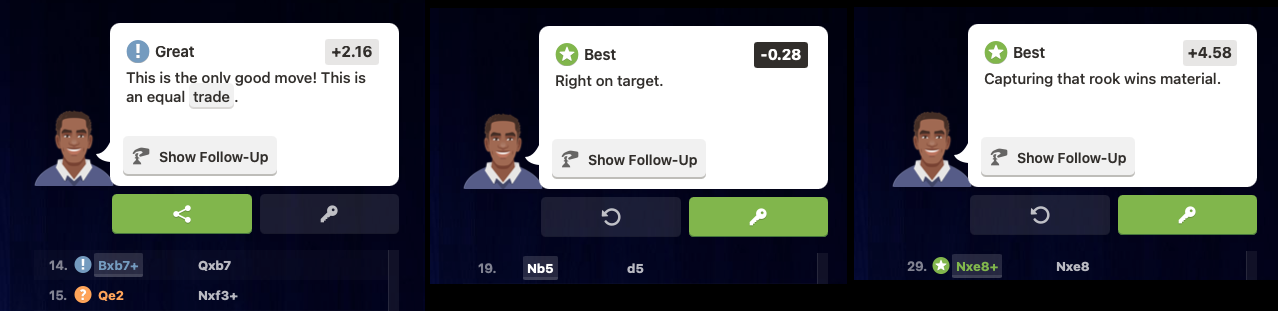

Here’s what Chess.com’s game review gave me for a few key moments:

Notice the pattern? Each comment focuses on a single move in isolation. “This is an equal trade” tells me nothing about why I wanted to trade pieces at that moment. “Right on target” is encouraging but doesn’t explain what target I was supposedly hitting. And “Capturing that rook wins material” is… well, yes, capturing rooks generally does win material.

Now here’s what my AI analysis provided for the same game phases:

From the “Balanced Minor Piece Middlegame” chapter:

White embarks on an active piece-play strategy, leveraging their knight’s mobility to create threats across the board… The critical turning point comes after several moves of jockeying for position when White plays Nf5+, initiating a tactical sequence. This knight fork forces Black’s king to move, allowing White to capture a pawn with Nxg7+. White continues the assault with Nxe8+, winning a rook for a knight.

Key Learning Points from the same section:

Knight mobility in open positions: White’s knight proves to be a powerful piece, making multiple threats from different squares (b5, a7, d4, f5, g7, e8). The lesson here is to keep your knights active and look for outposts where they can create multiple threats. Notice how White’s knight moves eight times in this sequence, each time with purpose.

Instead of just telling me I played a “best” move, the AI explains the entire tactical sequence, why it worked, and gives me a principle I can apply in future games. It connects the individual moves to the broader strategic picture.

The difference becomes even more pronounced when you look at how each approach handles the opening. Chess.com might flag a few moves as “excellent” or “good,” but my analysis provided this context:

Central Tension Management: White demonstrates good timing with the e4 advance. Rather than rushing this move early, White completes development first, castles, and only then challenges Black’s f5 pawn. This teaches us to prepare properly before creating tension in the center, especially against asymmetrical openings like the Dutch.

This explains not just that my moves were good, but why the sequence mattered and what principle guided the decisions. It’s the difference between getting a grade on a test and getting feedback that helps you understand the material.

The complete analysis runs several pages. You can see the full PDF output here, which breaks down this 52-move game into six distinct chapters, each with strategic insights that would be nearly impossible to extract from move-by-move engine evaluation.

Wrapping Up

Building this chess analysis tool scratched an itch I didn’t even realize I had. For years, I’d dutifully run my games through engine analysis, nodding along as it told me move 23 was a mistake while completely missing why I fell behind in the first place. It felt like having a brilliant friend who could only communicate through cryptic numerical codes.

The real revelation wasn’t that AI could analyze chess—it was that it could translate chess into the language humans actually think in. Instead of drowning in evaluation decimals, I now get explanations like “you rushed your attack before completing development” or “this knight fork demonstrates how exposed kings become tactical targets.” These are insights I can actually remember and apply in my next game.

The project took about a weekend to build and costs pocket change to run, but it’s already changed how I review my games. Rather than skimming through a list of colored dots marking my mistakes, I actually read the analysis because it tells a story about what happened and why. The chapter-based breakdown helps me see patterns in my play that would be invisible in traditional move-by-move analysis.

Will this make me the next world champion? Probably not—I still hang pieces with alarming regularity. But it’s given me something more valuable than perfect tactics: a clearer understanding of my own thinking process. And in a game where most improvement happens between your ears rather than on the board, that’s worth more than knowing I missed a +0.3 advantage on move 15.

Another fascinating post. I would be interested in seeing how you feed the games into Claude, I saw you mention you just gave it the PGN. What was the prompt you used to have it give you such great feedback? I am going to try this with ChatGPT now. Thanks!